Project Description

MARKET NEED

Organizations across most industries now collect large volumes of data from diverse sources. However, when developing a predictive analytics application, it is challenging to know how best to use these different data modalities.

Deep learning has become a mainstream machine learning method due to its strong performance in many applications. However, multimodal deep learning research currently focuses on only a few very specific settings.

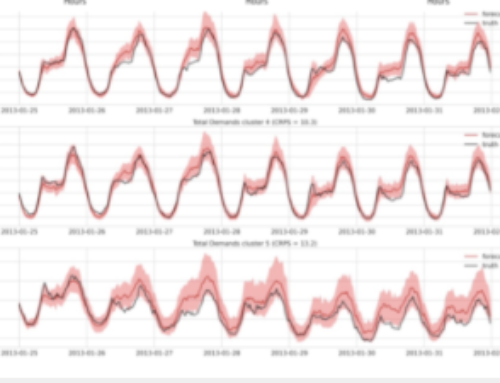

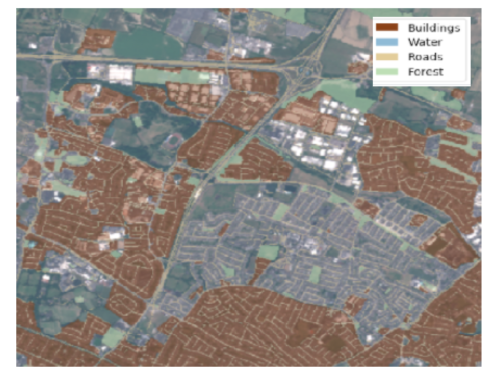

The project explores the use of deep learning to integrate heterogeneous data sources in a single predictive model for better performance.

TECHNOLOGY SOLUTION

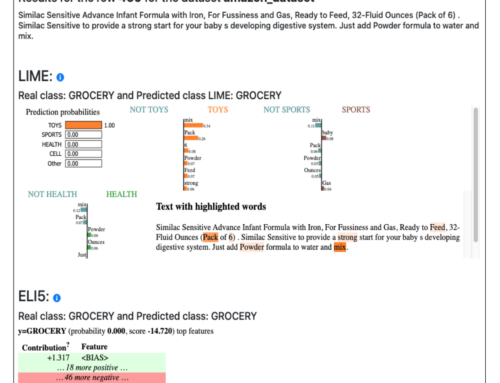

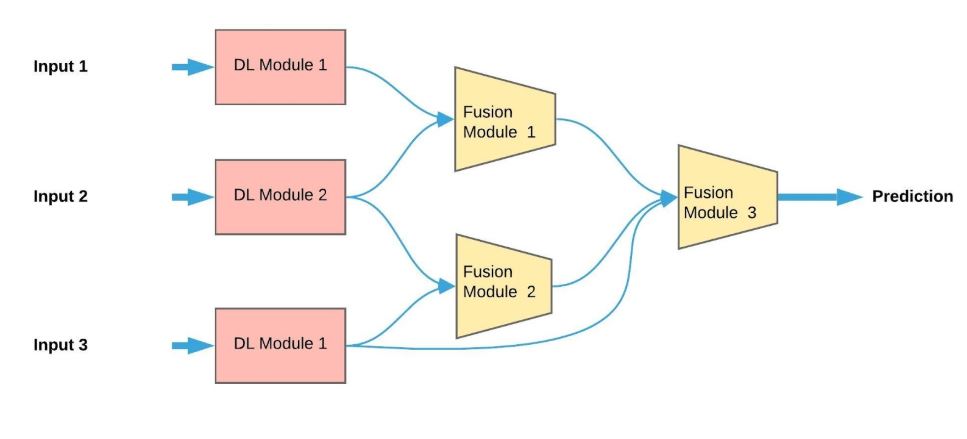

We implemented a multimodal deep learning library that:

- Allows users to create multi-input deep learning models for any type of data using a plug-and-play approach.

- Can be easily extended with new modules implemented by users.
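The plug-and-play idea described above can be illustrated with a minimal sketch. It is not the project's library or its API: every name here (`MultimodalModel`, `add_modality`, `fuse`, and the two encoders) is hypothetical, the encoders are simple stand-ins for learned neural sub-networks, and the `title` and `price` fields are assumed purely for illustration. The sketch only shows one common design, in which each modality has its own encoder and the encoded features are concatenated into a single vector.

```python
from typing import Any, Callable, Dict, List

# Hypothetical names throughout: this is NOT the project's library API.
Encoder = Callable[[Any], List[float]]


class MultimodalModel:
    """Toy fusion model: one encoder per modality, features concatenated."""

    def __init__(self) -> None:
        self.encoders: Dict[str, Encoder] = {}

    def add_modality(self, name: str, encoder: Encoder) -> None:
        # "Plug in" a new input branch; users can register their own encoders.
        self.encoders[name] = encoder

    def fuse(self, sample: Dict[str, Any]) -> List[float]:
        # Encode each modality and concatenate in registration order.
        fused: List[float] = []
        for name, encoder in self.encoders.items():
            fused.extend(encoder(sample[name]))
        return fused


def text_encoder(text: Any) -> List[float]:
    # Stand-in for a learned text branch: word count and character count.
    s = str(text)
    return [float(len(s.split())), float(len(s))]


def numeric_encoder(values: Any) -> List[float]:
    # Stand-in for a tabular branch: pass the values through as floats.
    return [float(v) for v in values]


model = MultimodalModel()
model.add_modality("title", text_encoder)     # assumed example field
model.add_modality("price", numeric_encoder)  # assumed example field
features = model.fuse({"title": "red running shoes", "price": [49.99]})
print(features)
```

In a real deep learning model, each encoder would be a trainable sub-network and the fused vector would feed a shared prediction layer; adding a modality would still amount to registering one more branch.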

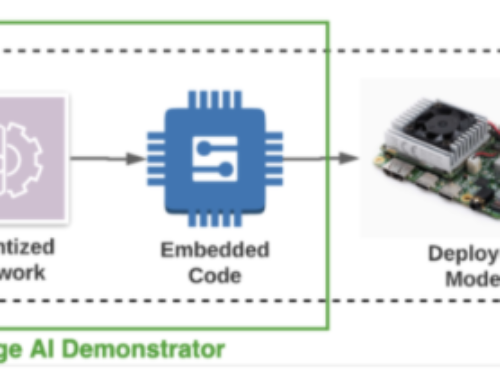

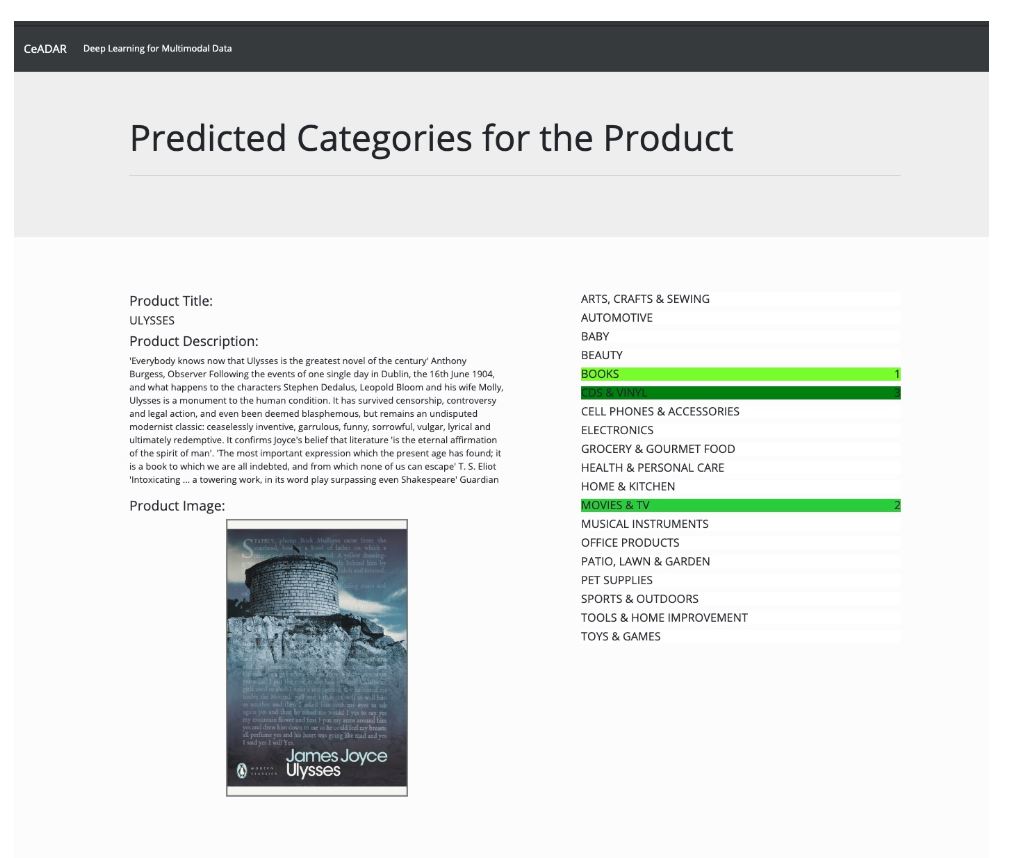

To show how our multimodal library works, we developed a demonstrator for the retail product categorization problem.

APPLICABILITY

Combining multiple input sources can make models more accurate and robust. Multimodal deep learning can be applied across many industries, including:

- Healthcare: medical imagery, sensor readings, text reports

- Finance: transactions, text documents

- Predictive Maintenance: sensor data, weather predictions, status logs

- Mobile Communications: contract data, call frequency time series

RESEARCH TEAM

Dr. Quan Le, University College Dublin

Dr. Oisin Boydell, University College Dublin